Automated Test

To help ensure that your virtual agent gives the right answer to every question users might ask, Syntphony CAI lets you test intents, documents, and questions and check whether your virtual agent's answers match what you expect.

Once you create a test scenario, you can run it as many times as you need, so you can check your virtual agent's accuracy every time you make a change.

For example, if PLACE_ORDER is the intent that matters most to your users, an automated test can show you whether its accuracy increased, decreased, or stayed the same after the last training.
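As an illustration only, the comparison described above can be sketched in Python. The sketch assumes you have the per-intent accuracy of two test runs as percentages. The function `compare_runs` and its `tolerance` parameter (the smallest change counted as a real increase or decrease) are hypothetical and are not part of Syntphony CAI.

```python
# Hypothetical sketch: label each intent's accuracy change between two test runs.
# Not a Syntphony CAI API; accuracies are percentages (0-100) keyed by intent name.


def compare_runs(
    previous: dict[str, float],
    current: dict[str, float],
    tolerance: float = 0.5,
) -> dict[str, str]:
    """Return "increased", "decreased" or "unchanged" for every intent present in both runs."""
    trend: dict[str, str] = {}
    for intent in sorted(previous.keys() & current.keys()):
        delta = current[intent] - previous[intent]
        if delta > tolerance:
            trend[intent] = "increased"
        elif delta < -tolerance:
            trend[intent] = "decreased"
        else:
            # Changes within the tolerance are treated as noise.
            trend[intent] = "unchanged"
    return trend


if __name__ == "__main__":
    before = {"PLACE_ORDER": 92.0, "CANCEL_ORDER": 80.0}  # illustrative values
    after = {"PLACE_ORDER": 88.5, "CANCEL_ORDER": 80.2}
    print(compare_runs(before, after))
    # {'CANCEL_ORDER': 'unchanged', 'PLACE_ORDER': 'decreased'}
```

Intents that appear in only one of the two runs are skipped, because there is nothing to compare them against.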

Important:

Running the automated test might generate additional fees.

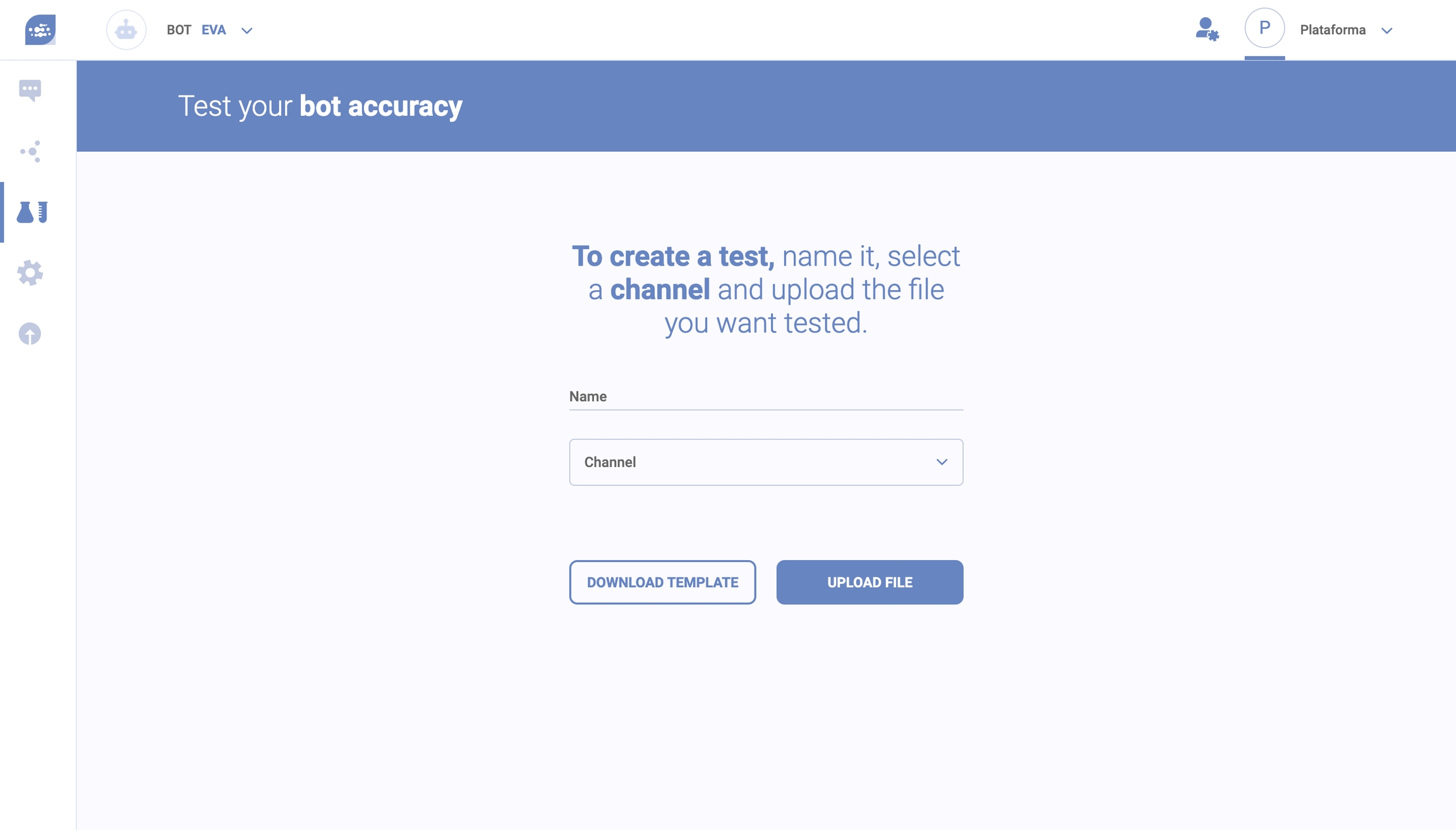

To create a test, first download the template, which shows how to format the .xls file you will upload.

Example of XLS file:

In this file, enter the component category, the component name, the example (utterance) it should respond to, and the expected answer. You can also add a description of each component, but this is optional.
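If you build the test file from your own data, it can help to check each row before uploading it. The Python sketch below models one row with the fields listed above and reports which required fields are empty. The class `ScenarioRow`, its field names, and the category values are hypothetical, so match them to the column headers in the template you downloaded.

```python
from dataclasses import dataclass
from typing import Optional


# Hypothetical model of one row in the test file. Field names are illustrative;
# the real column headers come from the template you download.
@dataclass
class ScenarioRow:
    category: str                      # component category, e.g. "intent", "document", "question"
    name: str                          # component name, e.g. "PLACE_ORDER"
    example: str                       # utterance the component should respond to
    expected_answer: str               # answer you expect the virtual agent to give
    description: Optional[str] = None  # optional, may be left empty


REQUIRED_FIELDS = ("category", "name", "example", "expected_answer")


def missing_fields(row: ScenarioRow) -> list[str]:
    """Return the required fields that are empty or contain only whitespace."""
    return [field for field in REQUIRED_FIELDS if not getattr(row, field).strip()]


if __name__ == "__main__":
    row = ScenarioRow("intent", "PLACE_ORDER", "I want to order a pizza", "")
    print(missing_fields(row))  # ['expected_answer']
```

The description is left out of the required fields because the template treats it as optional.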

Once you have the XLS file ready, upload it, name your test and select a channel.

Once the test is completed, you can see its results.

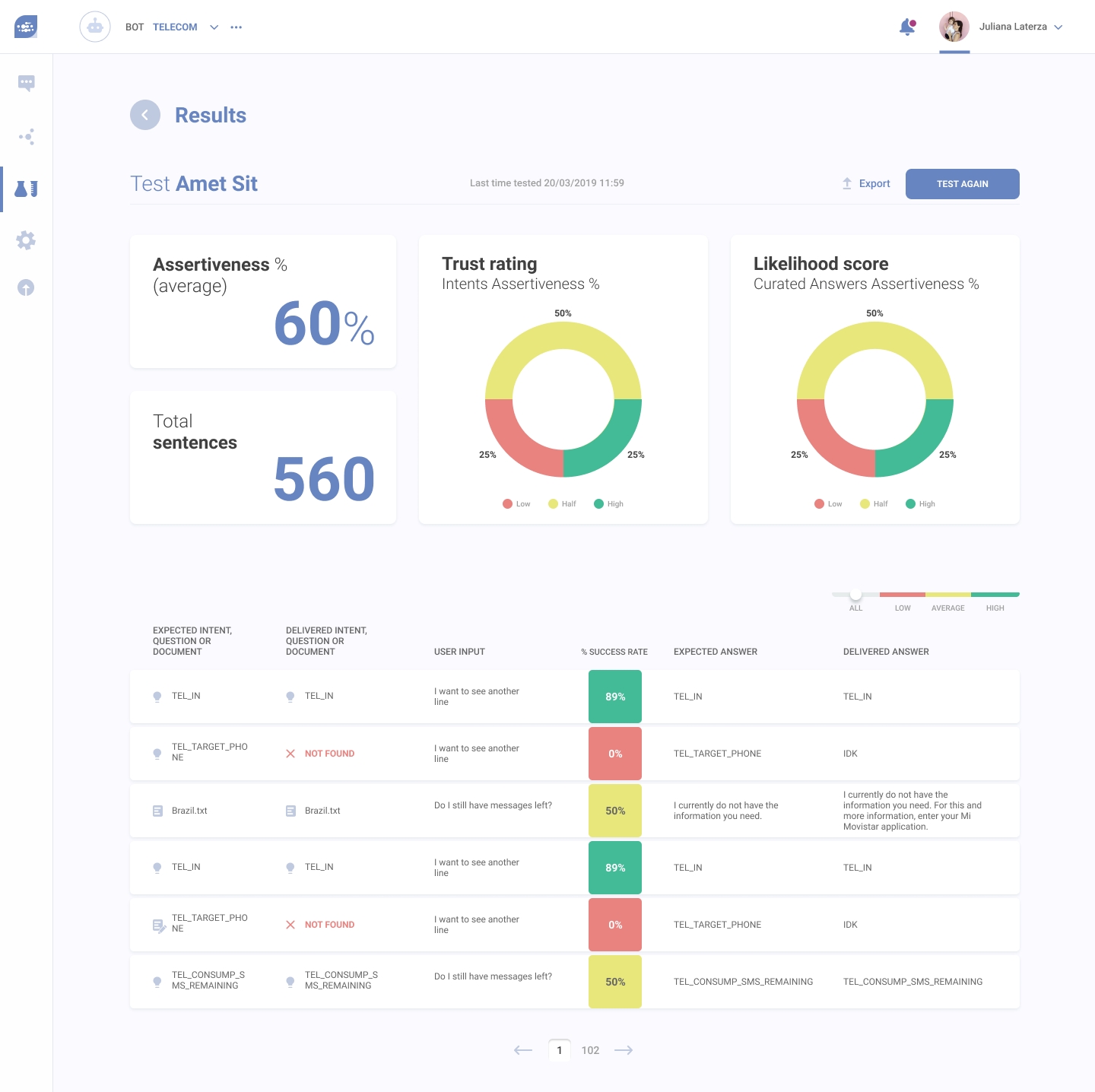

This screen shows the test results, starting with the general results.

The average assertiveness is the percentage of user inputs that the virtual agent linked to the correct component.

The trust rating is the percentage of user inputs that were linked to the correct intent.

The likelihood score is the percentage of user inputs that were linked to the correct document or question.
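One plausible reading of these three metrics is that each is the share of correctly linked inputs within a subset of the results: all rows, intent rows only, or document and question rows only. The Python sketch below computes them under that assumption; Syntphony CAI may calculate or weight them differently, and the names `Result`, `match_rate`, and `summarize` are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Result:
    category: str             # category of the expected component (hypothetical labels)
    expected_component: str
    delivered_component: str  # empty string if nothing was delivered


def match_rate(results: list[Result]) -> Optional[float]:
    """Percentage of results whose delivered component is the expected one."""
    if not results:
        return None  # no rows of this kind, so the metric is undefined
    correct = sum(r.delivered_component == r.expected_component for r in results)
    return 100.0 * correct / len(results)


def summarize(results: list[Result]) -> dict[str, Optional[float]]:
    """Assumed split: all rows, intent rows, and document/question rows."""
    intents = [r for r in results if r.category == "intent"]
    knowledge = [r for r in results if r.category in ("document", "question")]
    return {
        "average_assertiveness": match_rate(results),
        "trust_rating": match_rate(intents),
        "likelihood_score": match_rate(knowledge),
    }


if __name__ == "__main__":
    results = [
        Result("intent", "PLACE_ORDER", "PLACE_ORDER"),
        Result("intent", "CANCEL_ORDER", "CANCEL_ORDER"),
        Result("intent", "TRACK_ORDER", "PLACE_ORDER"),
        Result("document", "REFUND_POLICY", "REFUND_POLICY"),
    ]
    print(summarize(results))
```

In this illustrative data, three of four rows match, so the average assertiveness is 75%, the trust rating is about 66.7% (two of three intents), and the likelihood score is 100%. Returning `None` for an empty subset keeps "no data" distinct from "0% correct".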

Below the general results, you can see how each component did individually.

Each row shows the expected component, the delivered component, the user input, the percentage of times the correct component was linked to that input, the expected answer, and the delivered answer.

A component that performed well returns an answer that matches the expected answer. An average component returns an answer, but it might not match the expected one. A poor component returns a wrong answer or no answer at all.
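The three levels can be approximated with a simple rule of thumb. This Python sketch assumes that a "wrong answer" is one delivered by a component other than the expected one, and that two answers match when they are equal after ignoring case and extra whitespace. Both assumptions, and the names `classify` and `Performance`, are hypothetical; the product may judge answers differently.

```python
from enum import Enum
from typing import Optional


class Performance(Enum):
    GOOD = "good"
    AVERAGE = "average"
    POOR = "poor"


def _normalize(text: str) -> str:
    # Ignore case and extra whitespace when comparing answers.
    return " ".join(text.split()).casefold()


def classify(
    expected_component: str,
    delivered_component: str,
    expected_answer: str,
    delivered_answer: Optional[str],
) -> Performance:
    """Hypothetical rule of thumb, not the rule Syntphony CAI applies."""
    if not delivered_answer or not delivered_answer.strip():
        return Performance.POOR      # no answer at all
    if delivered_component != expected_component:
        return Performance.POOR      # answer came from the wrong component
    if _normalize(delivered_answer) == _normalize(expected_answer):
        return Performance.GOOD      # answer matches the expected one
    return Performance.AVERAGE       # right component, but the answer text differs


if __name__ == "__main__":
    print(classify("PLACE_ORDER", "PLACE_ORDER",
                   "Sure, what would you like?", "sure,  what would you like?"))
    # Performance.GOOD
```

Checking for a missing answer first means an empty reply is always rated poor, whichever component delivered it.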

Every test is stored in the repository, which shows each test's name, when it was last run, the channel it was run on, and its general assertiveness. From there, you can open any test and run it again.
