What's New

July, 2025

We are excited to launch the first version of Syntphony Messaging Business Tool, a platform designed to centralize and streamline communication across multiple channels using a no-code environment. After months of development and testing, we're proud to deliver a solution that transforms how businesses connect with customers.

Here's what's new:

💬 Messages

Our platform offers robust messaging capabilities tailored to your communication needs. You can send personalized messages to individual customers or reach multiple recipients at once.

Direct messages: Engage with your clients on a personal level by crafting customized messages tailored specifically for them.

Broadcast messages: Efficiently communicate with multiple recipients simultaneously using CSV uploads or pre-defined groups to reach a wider audience without compromising on personalization.

With these comprehensive messaging solutions, you can optimize your communication strategies, ensuring your messages are not only effective but also maintain the personal connection that your clients value.

📋 Contact & Group Manager

Efficiently create and manage contact groups tailored for targeted messaging campaigns. The Group Manager is designed to help you handle each contact with the utmost detail, providing a range of features to ensure organized and efficient management:

Information management: Keep comprehensive profiles for each contact with fields including name, email, phone number, address, etc.

Custom tags: Implement custom tagging strategies to classify and personalize contact management, enhancing the targeting of your messaging campaigns.

CSV import with field mapping: Simplify the import process from CSV files using field mapping capabilities, ensuring that all data is accurately integrated into your system. Customize the mapping to fit unique fields and specific data.
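Field mapping means renaming each CSV column to a contact field before the data is stored. Below is a minimal sketch, assuming a hypothetical mapping dictionary and field names (`name`, `email`, `phone`, `address`). Columns that are not mapped go into a `custom` dict so that unique fields are not lost. This is an illustration, not the platform's importer.

```python
import csv
import io

# Hypothetical mapping from CSV headers to contact fields; real field names may differ.
FIELD_MAPPING = {
    "Full Name": "name",
    "E-mail": "email",
    "Mobile": "phone",
    "Street Address": "address",
}


def import_contacts(csv_text, mapping):
    """Read CSV rows and rename columns according to `mapping`.

    Unmapped columns are kept under `custom` so unique fields survive the import.
    """
    contacts = []
    reader = csv.DictReader(io.StringIO(csv_text))
    for row in reader:
        contact = {"custom": {}}
        for column, value in row.items():
            value = (value or "").strip()
            if column in mapping:
                contact[mapping[column]] = value
            else:
                contact["custom"][column] = value
        contacts.append(contact)
    return contacts


sample = """Full Name,E-mail,Mobile,Loyalty Tier
Ana Souza,ana@example.com,+5511999990000,Gold
"""
print(import_contacts(sample, FIELD_MAPPING))
```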

📲 Tech

Quick registration & WhatsApp Business API integration

Registering to partner with us is a streamlined process accessible directly from our website. This enables fast and efficient access to manage WhatsApp Business API functionalities. We offer flexibility and convenience with two easy registration options:

Embedded Sign-In: Utilize the embedded sign-in feature on our website for an expedited registration journey, seamlessly connecting you with comprehensive services.

Phone Number Sign-Up: Alternatively, opt for the phone number sign-up, offering a straightforward and secure method to complete your registration process.

This integration empowers you to enhance customer interactions and elevate your business communication strategies effectively. Embrace a seamless transition to a more connected business infrastructure with our user-friendly registration and comprehensive API access.

🛠️ Build

Our platform streamlines the generation of customized WhatsApp links for specific phone numbers, with pre-filled messages and unique tracking IDs for measuring campaign performance. You can also create standard and advanced message templates (following Meta standards) with search, filtering, and preview capabilities.
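WhatsApp's public click-to-chat format is `https://wa.me/<number>?text=<url-encoded message>`, with the number in international format and digits only. Here is a minimal sketch of building such a link. The way the tracking ID is attached (appended to the message as `[ref:...]`) is an assumption for illustration. It is not necessarily how this platform tags campaigns.

```python
import re
import uuid
from urllib.parse import quote


def build_whatsapp_link(phone, message, tracking_id=None):
    """Build a wa.me click-to-chat link with a pre-filled message.

    Non-digit characters ("+", spaces, dashes) are stripped from `phone`.
    Appending the tracking ID to the text is a hypothetical tagging scheme.
    """
    digits = re.sub(r"\D", "", phone)
    if tracking_id is None:
        tracking_id = uuid.uuid4().hex[:8]  # short random ID if none is given
    text = f"{message} [ref:{tracking_id}]"
    return f"https://wa.me/{digits}?text={quote(text, safe='')}", tracking_id


link, ref = build_whatsapp_link("+55 11 99999-0000", "Hi! I'd like to know more.", "spring25")
print(link)
```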

📊 Analytics

The Analytics section presents comprehensive insights with key performance indicators and platform metrics to facilitate informed decision-making processes. The dashboard is designed to offer a detailed analysis of the platform's metrics, helping to track performance effectively and adjust strategies as needed.

Message History provides complete conversation tracking, giving users the ability to monitor every action within a conversation. It includes detailed resolution status, satisfaction scores, and channel distribution analytics.

Both reports can be exported and remain available in the Reports section. You can choose from multiple formats, including CSV, PDF, and Excel.

⚙️ Administration

Manage communication channels and phone numbers with ease. This section includes everything you need to stay connected, such as the generation of web widget scripts. Seamlessly configure your communication sources and ensure that your organization is always reachable. You can also efficiently set up integrations with Syntphony Conversational AI.

June, 2025

We’re excited to unveil Agentics, the next evolution of intelligent automation within Syntphony Conversational AI. This groundbreaking release redefines how AI Agents are built — empowering teams to design, train, and deploy agents using intuitive prompts and minimal coding. With enhanced governance models and modular components, Agentics adapts seamlessly to your systems and workflows, helping you create agents that truly think, act, and evolve.

Here's what's new:

AI Agents

Syntphony CAI introduces a new approach that transforms the way AI Agents are built, with intuitive prompts and minimal coding requirements. This new release introduces concepts and components that accelerate agent creation and easily adapt to your current systems and workflows.

AI Agents differentiate themselves from traditional intent-based systems by enabling agents to make decisions and take autonomous actions based on instructions to achieve complex goals. This new version will offer four different types of governance for you to choose from based on your Project needs.

Key Components

SCAI offers multiple AI governance models to align with different business needs and complexity levels. Choose between NLU for structured, intent-based interactions, Agentics for dynamic problem-solving capabilities, or Composite approaches that blend both methodologies for flexibility and control.

Through thoughtfully crafted prompts, you can coach the Supervisor and the team of Agents for specific use cases. A prompt-based design allows for more human-like interactions, for example by enabling agents to switch topics easily.

This modular approach involves creating instructions and guidelines for a Project containing a Supervisor who manages user input, analyzes requests, and delegates them to specialized Agents. These Agents understand context, learn from interactions, and tailor their responses to meet user needs, offering a more adaptable experience.

Learn more about the prompt-based fields

AI Agents are the core building blocks of an intelligent conversational experience. Each agent is a finely tuned entity designed to excel in a specific domain, equipped with unique skills such as Knowledge bases, predefined Action capabilities, a tailored communication style, and security protocols.

Being goal-oriented, AI Agents have a sophisticated reasoning capability that allows them to execute actions based on information provided by users. The following fields shape how your agent perceives itself and its responsibilities, influencing every interaction and decision it makes.

Learn more about AI Agents

Collections

This release also brings you Knowledge Collections, a powerful new feature that transforms how you organize and access information in your agents.

Collections allow you to:

End the sources chaos: Organize your knowledge sources by topic or needs, making information retrieval more intuitive and efficient.

Maintain context when reorganizing: Move sources between collections without losing valuable question connections.

Seamless updates: Refresh your knowledge base with the latest versions while preserving all existing connections.

Find what you need, faster: Get more relevant results with collection-specific searching that eliminates noise.

May, 2025

We're thrilled to introduce the first version of our Live Agent Console! This customer engagement platform brings together everything your support teams need to deliver exceptional service experiences to users of Syntphony Conversational AI.

Here's what's new:

✨ Live Agent Workspace

Live agents now have a dedicated command center for managing customer conversations! The intuitive interface enables seamless communication while providing powerful tools at their fingertips. Agents can effortlessly transfer conversations to colleagues or escalate to supervisors when needed. Quick responses make handling common inquiries easier, while the built-in supervisor chat ensures help is always available. Agents can easily manage their availability with customizable status settings (Online, Busy, or Offline) and track conversations through status categories (Active, Pending, Closed).

🚀 Teams Workspace

Take control of your support structure with our comprehensive Teams Workspace! Create specialized teams based on expertise, assign agents with just a few clicks, and configure intelligent routing rules to ensure customers reach the right experts every time. The management interface makes organizing even large support operations straightforward and efficient.

⚙️ Settings

Customize every aspect of your Live Agent Console with our flexible Settings module! Configure conversation timeouts and maximum assignments to match your team's capacity. Fine-tune agent capabilities for handling concurrent chats based on experience levels. Enable satisfaction surveys to gather valuable customer feedback, and build a library of quick response templates to boost efficiency and maintain consistent messaging.
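Per-agent limits on concurrent chats, combined with agent status, determine who can receive the next conversation. The sketch below assumes a "least-loaded Online agent wins" rule. That rule and the `Agent` fields are hypothetical. They illustrate how a capacity limit constrains assignment and are not the console's documented routing algorithm.

```python
from dataclasses import dataclass


@dataclass
class Agent:
    name: str
    status: str          # "Online", "Busy", or "Offline"
    max_chats: int       # hypothetical per-agent concurrent-chat limit
    active_chats: int = 0


def pick_agent(agents):
    """Return the Online agent with the most free capacity, or None.

    Only Online agents below their limit are eligible; the chosen agent's
    active-chat count is incremented.
    """
    candidates = [a for a in agents
                  if a.status == "Online" and a.active_chats < a.max_chats]
    if not candidates:
        return None  # no capacity: in this sketch the conversation simply waits
    best = max(candidates, key=lambda a: a.max_chats - a.active_chats)
    best.active_chats += 1
    return best


team = [
    Agent("junior", "Online", max_chats=2, active_chats=1),
    Agent("senior", "Online", max_chats=5, active_chats=2),
    Agent("away", "Offline", max_chats=5),
]
print(pick_agent(team).name)
```

An experienced agent with a higher `max_chats` ends up absorbing more load. This matches the idea of tuning concurrent chats by experience level.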

📊 Dashboards

Gain visibility into your support operations with our Dashboards! Monitor real-time statistics including agent status and active conversation counts. Track critical performance metrics like resolution time. Visualize individual and team performance to identify your top performers and opportunities for coaching. Keep your finger on the pulse of customer satisfaction with detailed rating analytics.

We can't wait to see how the Live Agent Console transforms your customer support experience! This is just the beginning – stay tuned for more exciting features in upcoming releases.

February, 2025

List of improvements and bug fixes in this release:

Language Model Selection for Prompt Cells

The Prompt cell now includes a dropdown menu with language model options for integration, located within the Advanced Parameters module.

Available models:

GPT 3.5

GPT 4o

GPT 4o-mini

GPT 4.1

GPT 4.1-mini

Important: GPT 3.5 will be discontinued in July 2025.

Websnippet

Adjusting the alignment of Carousel images

Bot parameters

Adjustment to the snack messages interface, allowing the area overlapped by multiple snacks, once closed, to be clickable.

Implementation of authentication in Webhooks and correction of the use of OAuth in services

Resolution of SSL connection problems between MS Java and Redis

Inclusion of the possibility of using debug log with Azure Service Bus

Analysis and correction of vulnerabilities pointed out by Sonar and Trivy

December, 2024

Multilingual Agent (beta)

We are excited to introduce the new Multilingual Agent feature, designed to elevate user experience and expand accessibility. With this capability, virtual agents can now understand and respond in multiple languages seamlessly. This enhancement eliminates the need to create separate agents for each language, allowing users to interact in their preferred language effortlessly.

Whether your audience speaks English, Spanish, Japanese, French, Thai, or any other supported language, the Multilingual Agent ensures a consistent and personalized interaction across the board.

Logs viewer

This release brings a Logs viewer to the dialog simulator, designed to give developers greater visibility and control over conversation flow. This tool provides real-time insights into conversation execution, making it easier to troubleshoot and optimize agent performance.

With the Logs viewer, developers can:

Access real-time visibility of any service errors during simulations

Review detailed step-by-step execution for each conversation

Use advanced request options to specify users and input values for targeted testing

List of improvements and bug fixes in this release:

Answers repository

Application of rich text listing styles

Dialog simulator

Full conversation update with library components

Gen AI cell

Cell has been renamed "Prompt cell"

Menu

Adaptation to keep main menu expanded when selecting a submenu

Notifications

Blank space removed in Notifications with minimal content

Websnippet

Inclusion of accessibility in buttons for visually impaired users

Layout adjustment for cropped images

Answers repository

Layout adjustment in response registration field with an out-of-standard frame

Rephrasing filter adjustment in answers allowing it to be reset

Review of persistence flow when editing a carousel button

Dialog simulator

Review of action type editing flow in response templates that were not persisting

Adjustments to the display of the "typing message" indicator

Text formatting options adjustment

Channels

Title change in the edit modal

Dashboards

Time correction for messages

Dialog Manager

Layout correction for modal display on existing cells

Entities repository

Error message adjustment when saving an entity without "value name"

Persistence flow adjustment for entities to block the inclusion of records with empty spaces

Text area layout adjustment in entity registration

Gen AI (Prompt) cell

Tooltip alignment layout adjustment

Intents repository

Adjustment in the search functionality behavior, updating the screen after deleting search characters

Knowledge AI

Displaying the "Create question" button even when no results are found during the search

Websnippet

Scroll button visibility adjustment

Code Maintenance

Removal of code validation in rule and code cells during flow execution

Removal of deprecated endpoints and methods across all projects

Dependencies

Removal of eva-channel dependency on eva-infobip and eva-automated-tests

Library Updates

Replacement of the @Schema annotation from the Swagger library

Replacement of the @GenericGenerator annotation with @UuidGenerator

Project Migration

Migration of eva-cockpit-v2, eva-cockpit-v2-lib, and eva-cockpit-websnippet projects to Angular 17

Security Vulnerabilities

Analysis and remediation of vulnerabilities identified by Sonar and Trivy

August, 2024

List of improvements and bug fixes in this release:

Dashboards

Layout tweaking when selecting many tags in a funnel step

Knowledge

Training button has been restricted to the Knowledge page

Login

Improvements to the rerouting flow on the login screen

Training

Permission to change intents/entities during training implemented

Training button has been hidden when there is no new content to be trained

Webchat plugin (websnippet)

Additional open context parameters have been added

Knowledge

Error message when importing documents fixed

Adjustment made to avoid overlapping messages when deactivating Knowledge

Login

Redirection after logout has been adjusted

Display of errors when trying to log in with organization as parameter fixed

Training

Layout of the dialog simulator on the training screen fixed

Webchat plugin (websnippet)

Documents are now displayed as links instead of text

Page scroll adjusted to remain enabled after chat is closed

Secret expiration when chat is closed has been prevented

Images in Firefox have been fixed to avoid cropping

Scroll button adjusted to not disappear

Open context maintained in all flows

Knowledge

Deprecated automated-learning-related endpoint removed

Vulnerability analysis and fixes

June, 2024

We are happy to announce the latest updates, designed to enhance user experience and strengthen data security. These new features include advanced data protection, seamless integration with Azure OpenID, enhanced voice channel configurations, an improved user interface, and expanded channel options.

Data Masking

To enhance the security of PII (Personally Identifiable Information) within the platform, we're introducing a feature that activates data masking for the virtual agent. This feature reduces the risk of data breaches and supports compliance with privacy regulations.
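Data masking replaces sensitive values with placeholders before text is stored or displayed. Here is a minimal regex-based sketch. The two patterns (email addresses and long digit sequences such as phone numbers) and the placeholder labels are illustrative assumptions. Production PII detection needs broader, locale-aware rules, and this is not the platform's masking logic.

```python
import re

# Illustrative patterns only; real PII detection needs broader, locale-aware rules.
PII_PATTERNS = [
    (re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"), "[EMAIL]"),
    # 9 to 16 digits, optionally separated by spaces, dots, or dashes
    (re.compile(r"\b\d(?:[\s.-]?\d){8,15}\b"), "[NUMBER]"),
]


def mask_pii(text):
    """Replace every match of each pattern with its placeholder."""
    for pattern, placeholder in PII_PATTERNS:
        text = pattern.sub(placeholder, text)
    return text


print(mask_pii("Contact me at ana@example.com or 11 99999-0000."))
```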

Integration with CISCO VXML

The new integration with CISCO VXML and Syntphony Conversational AI enhances the operational efficiency of Contact Centers. This integration leverages the sophisticated telephony and contact center capabilities of UCCE, combined with the intelligent automation features of Syntphony CAI. Additionally, users can now configure phone numbers for voice channels like VXML directly from the channel library.

Login with OpenID

Users can now log into our platform directly from their Azure organization (if enabled), providing a seamless and secure authentication process. This integration supports single sign-on (SSO) capabilities, reducing the need for multiple passwords and simplifying user management for IT administrators.

Knowledge

We are introducing enhanced contextual understanding in Knowledge.

These improvements allow the system to consider previous interactions, providing more accurate and relevant answers to end users. You can set the number of past interactions to be taken into account, from 0 to 5, ensuring that follow-up questions are understood in context.
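The "number of past interactions" setting can be seen as a sliding window over the conversation history. Here is a minimal sketch, assuming history is stored as (user message, answer) pairs. The 0 to 5 bound comes from the text above. The `User:`/`Agent:` layout and the function name are hypothetical.

```python
def build_context(history, current_question, window=3):
    """Combine the last `window` exchanges with the current question.

    `history` is a list of (user_message, answer) pairs, oldest first.
    """
    if not 0 <= window <= 5:
        raise ValueError("window must be between 0 and 5")
    # history[-0:] would return the whole list, so handle window == 0 explicitly
    recent = history[-window:] if window else []
    lines = []
    for user_msg, answer in recent:
        lines.append(f"User: {user_msg}")
        lines.append(f"Agent: {answer}")
    lines.append(f"User: {current_question}")
    return "\n".join(lines)


history = [
    ("What plans do you offer?", "Basic and Premium."),
    ("How much is Premium?", "$20 per month."),
]
print(build_context(history, "Does it include support?", window=1))
```

With `window=1`, only the Premium pricing exchange is included. This is enough for "it" in the follow-up question to be resolved.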

Voice Gateway

You can now set up default error handling, timeout configurations, TTS (text-to-speech), voice menus, DTMF menus, and voice handover settings directly from the interface, ensuring smooth call transfers to human agents when necessary.

Notifications

We're introducing a mini product center to keep you up to date with the latest features and events, so you don't miss out on important updates!

New Navigation Systems

Menu

Experience a whole new way of navigating through eva. The new navigation structure adds new sections and a more logical grouping to help you find what you need faster, plus the option to pin your most accessed or favorite sections to the top of the menu.

Channel Library

Another significant improvement made was in the Channels section navigation! With this update, we've restructured the library to enhance usability and intuitiveness, ensuring that users can effortlessly find the integrations they need.

Plus, we have added new channels to our library:

WeChat

Kakao

Line

Instagram

Amazon Connect

Genesys

Odigo

Twilio

Infobip Conversations

Naka

Digital Humans

Slack

List of bug fixes and other improvements in the June release:

Parameters

Bug resolution on the Parameters screen (env and bot).

Error when registering environment parameters corrected.

Content type body validation and rest connector cell output adjusted.

Sliders changed via input.

Snack message after parameter slider change corrected.

Training

Training status bar adjusted to be behind the menu.

Activation of the training button after document removal.

Rest Connector

Problem with editing rest connector with key/value fixed.

Tooltip in the body of the rest connector displayed correctly.

Websnippet

Switch enable/disable corrected.

Source adjusted to reflect on the site.

Text URL error resolved.

Images now render correctly.

Smartphone styles corrected.

KnowledgeAI

KAI training page adjusted.

Hover message on create question button fixed.

Remove duplicate image button set.

User List

User screen repositioned correctly.

Remove duplicate image button fixed.

Dropdown of list options adjusted.

Login

Automatic logout after inactivity fixed.

Flows Repository

Drop down flow creation adjusted.

Title of the user journey flow modal corrected.

Answers Repository

Template files aligned correctly.

Response modal buttons aligned.

Snack

Hover message in the dropdown of the "Create bot" screen adjusted.

Snack message after slider change fixed.

Parameters

Adjustment in the registration of synonyms in the incorrect field.

Adjustment to the enable/disable switch when closing the confirmation modal.

Training

Adjustment to the column name in the training list for non-clever bots.

Entities Repository

Adjustment in the registration of entities with blank fields.

Adjustments to the entities screen for viewer users.

Improvements to the filter refresh entities screen.

Data visualization of saved entities (Watson/DialogFlow) corrected.

Rest Connector

Improved authentication with RestConnector.

URL validation for audio and image responses adjusted.

Websnippet

Angular Material version update

Create Bot

Bot name in the adjusted language field.

Channel modal maintained correctly after change.

Registered image preserved when editing bot.

Dashboard

Inclusion of seconds in conversation and message reports.

Tag funnel configuration adjusted on the create funnel page.

Login

Logout adjusted after closing the NPS modal.

Answers Repository

Quick reply registration adjusted.

Zero Shot

Zero shot entity parsing adjusted.

Automated tests for LLM bots created.

Adjustments to prompt and intent post-processing to reduce content filter errors in zero shot bots.

Voice Gateway

Genesys Interaction ID for Conversation Tracking added

Improved audio recognition through a circular audio buffer for speech-to-text

Custom codec configuration via DNIS

G722 codec support

Revision of the cockpit NPS micro.

Change in the logs of lib eva-adapter-security-checker.

Spring version update.

Removing deprecated methods from eva-rest-client.

Resolution of the caching problem (eva-web).

Standardization of all types of chat logs (flows and cells) to include specific data.

Improved logs for Jaeger (eva-technical-log-lib).

Correction of endpoint permissions (eva-web).

Saving the name and UUID of the flow in the user interaction.

Implementation of refresh scope in AI services

Removal of the last 5 user messages from the session table and caching (eva-broker).

Improvements in connection maintenance with the messaging service.

Implementation of the NPS service.

Vulnerability analysis.

Adjustments and revisions to the structure of AI unit tests for integration with Sonar.

Implementation and correction of the AI SAST structure.

Adjusting Sonar permissions in AI service pipes.

Creation of users in RabbitMQ via Helm for AI services.
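The Voice Gateway item above mentions a circular audio buffer for speech-to-text. As a general illustration of the technique (not the Voice Gateway's code), a ring buffer holds the most recent audio frames in fixed memory and discards the oldest once it is full. The capacity and byte-string frames below are assumptions.

```python
from collections import deque


class CircularAudioBuffer:
    """Fixed-size ring buffer that keeps only the most recent audio frames."""

    def __init__(self, max_frames):
        # deque with maxlen drops the oldest frame automatically when full
        self._frames = deque(maxlen=max_frames)

    def push(self, frame: bytes):
        self._frames.append(frame)

    def snapshot(self) -> bytes:
        """Return buffered audio, oldest to newest, e.g. to hand to speech-to-text."""
        return b"".join(self._frames)


buf = CircularAudioBuffer(max_frames=3)
for chunk in (b"a", b"b", b"c", b"d"):
    buf.push(chunk)
print(buf.snapshot())  # b'bcd'
```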

January, 2024

After months of dedicated work, our product team is thrilled to unveil a wave of transformative features powered by generative AI, from adding dynamism to conversations to helping you craft and enhance text effortlessly. Dive into the capabilities that will help you build a more efficient and advanced conversational experience.

Find out what's new in this latest release:

Zero-Shot LLM Model

The Zero-Shot classification is a task that enables the model to classify intents during runtime, even if they have not yet been trained, using semantic similarity.

This feature uses pre-trained Large Language Models (LLMs) from OpenAI to help the engine identify relevant intents without explicit training utterances, significantly simplifying and shortening the process of training your virtual agent.
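Classification by semantic similarity compares an embedding of the user's message with embeddings of each intent. It picks the closest intent when the similarity clears a threshold. The sketch below uses cosine similarity over toy 3-dimensional vectors. A real system would obtain embeddings from a language model. The threshold value and intent names are hypothetical, and this is not the engine's implementation.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def classify(utterance_vec, intent_vecs, threshold=0.5):
    """Return the most similar intent, or None if nothing reaches `threshold`."""
    best_intent, best_score = None, threshold
    for intent, vec in intent_vecs.items():
        score = cosine(utterance_vec, vec)
        if score >= best_score:
            best_intent, best_score = intent, score
    return best_intent


# Toy "embeddings"; a real system would get these from a language model.
intents = {
    "check_balance": [0.9, 0.1, 0.0],
    "cancel_card": [0.0, 0.2, 0.95],
}
print(classify([0.8, 0.2, 0.1], intents))
```

Returning `None` below the threshold corresponds to falling back to a Not Expected flow rather than forcing a low-confidence match.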

Rephrase Answer

Empower your virtual agent's answers with real-time rephrasing! Enhance user engagement by tailoring responses based on context and emotions for a more natural conversational experience.

Assist Answer

A new feature in the Answer Cell that makes it easier for conversational designers to create or enhance answers with the help of generative AI. You can generate text from a simple instruction or, with a single click, expand, reduce, or improve text, fix spelling and grammar, or change the tone. Available in the text template for all channels.

Knowledge

A solution that transforms documents into structured, easily accessible content. Knowledge AI doesn't rely on a conventional intent-based model to identify user questions and provide answers, which makes it ideal for FAQs, product descriptions, institutional content, manuals, chit chat, etc.

You can upload a TXT or PDF file to extract insights for your virtual agent. It can read images with text (except illustrations), update the file while retaining all questions previously linked to the document, and track user journeys through tags.

Extensions

The Prompt cell, Rephrase Answer, and Assist Answer can now be enabled or disabled in this section.

Parameters

New threshold parameters were added in this release to configure the request timeout behavior of the Generative AI services.

October, 2023

Web chat customization

Integrate conversational AI into your website, app and mobile channels. Whether you want to enhance customer satisfaction or simplify user interactions, Syntphony Conversational AI enables you to create a personalized webchat solution that perfectly matches your distinct brand identity, ensuring a dynamic user experience.

Examples Generator (beta)

Speed up your knowledge base creation process by automatically generating a list of context-related utterance examples for each intent with this exciting feature.

The Example Generator empowers writers to quickly generate multiple sentences using the provided context. By effortlessly creating sample utterances for your intents, you'll turbocharge the training process, making it faster and more efficient than ever before.

Dashboards - Funnel charts

Unlock the power of Funnel charts in your Dashboards: gain insights about your conversations, make data-driven decisions, and optimize user experiences. This new Dashboards section will help you better understand the conversation journey, drop-off points, and A/B testing.

Add extra features to enhance performance

We've added a new section, Extensions, to enhance your virtual agent's capabilities. A variety of advanced features can be enabled with a single click. Stay tuned for upcoming features.

Filter by tags

Introducing a new filtering option in Dashboards, leveraging tags added to cells and flows in the Dialog Manager. This feature offers a precise way to analyze specific scenarios, simplifying the performance analysis of your virtual agent.

Audio Interactions

Our platform is equipped to understand audio when users communicate through channels that support audio recordings. This feature allows you to engage with users via audio interactions, enhancing accessibility. It's designed to work across all audio-compatible channels.

Refer to the API Guidelines to learn how to integrate it.

Trial Accounts

Trial accounts created in the Try Syntphony Conversational AI environment offer a seamless transition to a production upgrade with just a single click. This can be accomplished by purchasing a license, enabling team members to retain all the content they've diligently crafted during the trial period.

August, 2023

Prompt cell (beta)

The recent rise of Large Language Models (LLM) technologies, such as OpenAI's ChatGPT, has unveiled a remarkable potential in harnessing the power of NLP. The new Prompt cell will empower you to unlock all this potential with its awe-inspiring transformative capabilities.

This new cell for generative content is a versatile tool with many use cases. In this cell, you can enter a prompt, which may use any existing parameters or user input's text as part of it, to process inquiries, create answer variations, and format your inputs into specific formats.

To better understand how it works, we recommend visiting its dedicated page, which explains its features and how-tos in detail. In summary, you can:

Rewrite texts for your answers

Process your user's input and store it in the format of your choice, such as JSON or other technical structures, based on your specific requirements.

Engage in freeform conversations with the language model by utilizing user input for inquiries.

Infer intents and needs regardless of the NLP's configuration, allowing for diverse, generic zero-shot integrations with Not Expected flows redirecting to the appropriate flow based on user text parsing.

Generate tailored texts based on available or missing user information.

Validate inputs, make sure they are in the correct format, and display text with only specific fields.

Literally anything an LLM tool can provide you with.

Rest Connectors

We've added yet another cell that will allow you to integrate literally any API you need, the Rest Connector cell.

Previously, you could use Transactional Service Cells to make requests to a Webhook of your own, which allowed you, to some extent, to integrate data submissions into your web service through headers. Now, this new service cell comes with an integrated authentication step, allowing you to use any of the market-standard authorization types to process any type of request.
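Market-standard authorization types typically end up as request headers. Here is a minimal sketch of building headers for three common schemes: HTTP Basic (RFC 7617: `Basic` plus base64 of `user:password`), Bearer tokens, and an API key header. The scheme names passed to the function and the default `X-API-Key` header name are assumptions. Check which types the Rest Connector cell actually offers.

```python
import base64


def auth_headers(auth_type, **creds):
    """Build request headers for a few common authorization schemes.

    The `auth_type` names and the default API-key header are illustrative.
    """
    if auth_type == "basic":
        raw = f"{creds['username']}:{creds['password']}".encode("utf-8")
        return {"Authorization": "Basic " + base64.b64encode(raw).decode("ascii")}
    if auth_type == "bearer":
        return {"Authorization": f"Bearer {creds['token']}"}
    if auth_type == "api_key":
        return {creds.get("header", "X-API-Key"): creds["key"]}
    raise ValueError(f"unsupported auth type: {auth_type}")


# Example credentials taken from RFC 7617
print(auth_headers("basic", username="Aladdin", password="open sesame"))
```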

Agent Templates

A new Agent Template for Airlines was added to our list. Agent Templates are pre-built, ready-to-use virtual agents that help establish a base for building conversations. This one is a collection of 19 flows focused on travel services in 3 languages: English, Spanish, and Portuguese.

March, 2023

Dashboards

This release includes new dashboards with sections for User messages, Conversations, and Reports.

Among others, the new dashboards bring data and gather insights about:

Full Conversations

Message details

Confidence score

The NLP and/or Knowledge AI (Automated Learning) response

Satisfaction

Duration

Channels

The Voice Gateway ("VG")

The new evg-connector allows you to create voice agents within Syntphony CAI, which means no external platform is needed. Now you can easily implement and automate virtual agents using text and audio answer templates in Dialog Manager, integrated with a voice channel.

Amazon Lex Integration

We have added another NLP to our list! If your knowledge base is built on Amazon Lex, you can integrate it with our platform to create flows and manage the entire user conversational journey.

OpenAI’s GPT-3 integration

Syntphony CAI is using this powerful new tool so you can improve the way you manage the Not Expected answers and deliver much more dynamic and accurate answers in real time, giving users an amazing experience and speeding up the creation of your conversations.

Improvements in Welcome and Not Expected flows

The Welcome and Not Expected flows now offer new possibilities according to the channel being used. You can add new cells to these flows to, for example, segment different user groups using rule cells and deliver a different welcome message for each group. You can also use rule cells to set your virtual agent to deliver different Not Expected answers for different segments of customers. Read more about all the possibilities.

Agent Templates

A new Agent Template for E-commerce was added. Agent Templates are pre-built, ready-to-use virtual agents that help establish a base for building conversations. This one is a collection of 19 flows focused on e-commerce services in 3 languages: English, Spanish, and Portuguese.

Improvements in Code and Rule cells

Branch, enable and disable your flows using Code and/or Rule cells.

November, 2022

Improvements in Conversation API added to this version:

New instance API conversation endpoints

New Infobip, Google Assistant and Facebook API endpoints

New error codes in instance conversation API

October, 2022

Dashboards

A new Dashboard feature is available, providing key metrics that will help you analyze if the virtual agent is successfully performing and achieving your business goals. With this new feature, Syntphony CAI gathers and charts specific data and easily custom them the way you want.

The new Overview section includes the following:

Metrics for total conversations, total messages, total users, percentage of accuracy, top 10 intents, and top 10 flows.

Comparative data from the previous period, so you can quickly see how the virtual agent has been performing.

Quick filters to switch the chart data visualization.

More details, such as occurrences by channel and total executions in the period, shown by simply hovering over the bars and lines on the charts.

Filters by period (up to 12 months of data) and by channel.

Export your dashboards as PDF

Sort and Pagination

For a better experience, we added new ways of navigating the repositories. You can now sort items by name, modification date, or type, which also makes it easier to find what you are searching for.

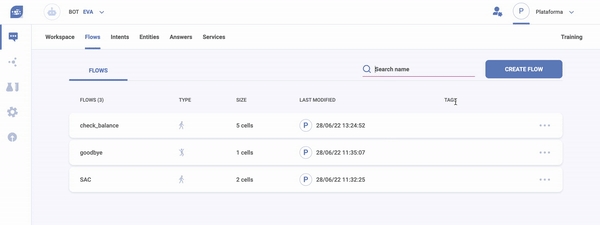

This release also adds pagination, which lets you control how many items are displayed per page. You can display from 50 up to 100 items per page in the Flows, Intents, Entities, Services, and Answers repositories.

Improvements when Importing and Exporting Virtual Agents

This release improves the way you import your virtual agent: you can now choose whether to import it with a new ID or keep the same ID it had in the previous environment.

Keeping the same ID works like moving the virtual agent from one environment to another (from dev to prod, for example), without having to create a whole new agent every time you move it to a different environment.

You can also update (replace) an existing version, applying all changes made to parameters, channels, workspace, repositories, and Knowledge AI, or restore a backup.

We also added a new pop-up menu shortcut to import, export, and update the virtual agent directly from the main page.

Improvements in Knowledge AI (previously known as Automated Learning)

You can now add questions to disabled documents in Knowledge AI and choose whether to activate the documents or leave them deactivated.

Dashboards: Release of the new Dashboard Overview, where Syntphony CAI gathers and charts the specific data you need, which you can easily customize the way you want.

Sort and Pagination: A better navigation experience across all repositories.

Import and Export improvements: Choose between importing the virtual agent as a new one, with either the same ID or a new, unique ID, or updating (replacing) an existing one. Updating won't change the ID.

Knowledge AI improvement: Allows creating questions in disabled documents.

July, 2022

Organizations and Environments

Syntphony CAI now lets users manage Organizations and Environments on the same page for greater operational efficiency. You no longer need to open different browser pages with different login accounts.

This also means more flexibility to create different Environments (dev/test/prod, for example) within these Organizations, according to your project strategy. At the permission level, Admins can set different user access levels and define roles for each environment and the virtual agents in it. In other words, the same user can be an editor in environments A and B and a viewer in environment C.

In practical terms, this helps reduce time to market, since you can deploy quickly and update to new versions faster.

Agent Templates

New Agent Templates were added. These are pre-built, ready-to-use virtual agents that help establish a base for building conversations for Help Desk (a collection of 21 flows focused on ticketing services) and Telco (a collection of 25 flows focused on telecom services).

Search within the Dialog Manager repositories

Search for specific cells (intent, entity, answer, service), flows, AL documents, or AL questions in long repository lists in Dialog Manager by typing the item's name in the search bar.

Profiles and roles

We have updated the profile and role definitions to better respond to our users' needs. The previous version had two types; there are now five: owner, admin, supervisor, editor, and viewer. This makes each user's role in each project clearer, so you can define their access levels and permissions across all Syntphony CAI resources. See new definitions.

Our platform is equipped to understand audio when users communicate through channels that support audio recordings. This feature allows you to engage with users via audio interactions, enhancing accessibility. It's designed to work across all audio-compatible channels.
